Review of Yoshua Bengio’s lecture at the Artificial General Intelligence 2021 Conference

At the 2021 Artificial General Intelligence Conference, a star keynote speaker was Yoshua Bengio. He has been one of the leading figures of deep learning with neural networks, for which he was granted the Turing Award last year.

He delivered a convincing argument for a new focus on explainability and explicit knowledge representation in the AI research community. The ultimate goal of this new trend, he argued, is artificial intelligence that is better at generalizing, adapting, and solving varied problems.

For anyone who develops and trains deep neural networks on a daily basis, this is an exciting and tempting proposition. Let’s follow Yoshua’s argument and try to get a glimpse into the next big thing in AI.

Psychology in AI

The first bold claim Yoshua made is that the state of AI has advanced enough to start applying human-level analysis. Such attempts were frowned upon earlier, but the advancement of current approaches has been such that there is a benefit to thinking about the model’s intelligence from a human perspective.

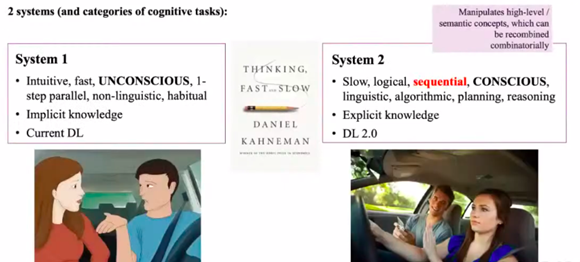

One such useful division is between System 1 and System 2 reasoning. This can be simplified as thinking fast (System 1), versus thinking slow (System 2). But how does it apply to Deep Learning (DL)?

Figure 1: System 1 versus System 2 thinking

In the context of a trained model, System 1 decision making is a process that does the job without being able to explain its reasoning. It happens without drawing on the facts, except in the sense that it demonstrably performs well. System 2 is the more involved process of consciously focusing, like driving in a new car, a new city, or even in a country where cars drive on the other side of the road.

Deep learning blindly trains a neural network on input and output data, updating weights in the network’s layers until it learns to generalize. This is System 1 thinking. Future approaches should complement this with memory, and the application of learned rules to explicitly reason about new situations. This will be System 2 deep learning.

We are already seeing this happen in deep translation models; networks which exhibit attention, such as the popular BERT model, outperform approaches without this ability.

Inductive Bias

Intelligence is the ability to gather information, save it, and to make use of it. So one element of intelligence is having a repository of knowledge. That’s where inductive biases come into play.

Inductive biases are underlying assumptions that are part of an approach. For example, we assume that the laws of physics are universal, while a computer vision model assumes that scenes of the world are translation-invariant. A person who’s able to understand a multitude of different concepts is deemed intelligent, and a similar definition can be applied to artificial models of reasoning. So intelligence isn’t just how much data you need before you get good, but how much data you need before you get good at something you haven’t seen before.

It may be tempting to try to do without inductive biases altogether, asking the deep learning model to pick up everything it needs as it goes. This approach is impractical, because it takes too long and too much data to get a decent model. Instead, Yoshua argues, we need to implement an inductive bias which optimizes the tradeoff between generalization and efficiency.

Neural Causal Models and Knowledge

So, why haven’t we done this yet? The recollection of previously seen information is extremely slow to train, because it’s hard to create a mapping between what’s been seen and what’s now relevant. For instance, how can a model learn that an intersection that it saw thousands of hours earlier is now relevant to knowing how to drive through a new one? Because links to memories are tenuous, they require some engineering to get started, as in the popular long short term memory (LSTM) recurrent architecture, where long-term memory is explicitly defined.

This can be achieved by making intervention local. By looking for small, repetitive patterns, future AI could recall similar instances of a situation it is facing, and use them to improve its decision making. This attractive possibility has yet to be attempted on a larger scale or to create results comparable to state-of-the-art techniques. Another tempting avenue is learning neural causal models with active interventions, where the network effectively dreams up possible outcomes of prior situations.

System 2 deep learning outlines a systematic approach to create models which can cope with out-of-distribution observations. Whatever the future holds, we are likely to see an emergence of complex models with the ability to explain why they made the decisions they did. Not only because it’s a helpful feedback mechanism for us to know how the models we have trained work, but because it will also improve the models themselves, bringing them closer to human reasoning through logic, memory, and imagination.